Introduction

New artificial intelligence (AI) offerings, such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude, have fascinated business leaders and the public alike. While the technical progress that has garnered headlines is impressive, the economic feasibility of these AI systems can fall short. For firms to justify adopting new AI capabilities, these systems must create value that exceeds their cost. However, because the upfront development costs are enormous, just achieving breakeven on AI investments will be a challenge unless firms have the deployment scope necessary to sufficiently amortize costs. Put another way, for AI to move from a few generalist systems to the myriad specialized systems needed for deployment throughout the economy, an enormous amount of costly “last mile” customization will be needed. Whether such customization can be justified economically will depend on the performance needs of the companies deploying these systems and on the ability of technology providers to achieve greater scale.

To gain insight into AI adoption and job displacement, we worked with coauthors to develop a cost framework for computer vision, one of the most developed areas of AI (Svanberg et al., 2024), which we summarize in this article. That model is the first end-to-end evaluation of AI automation, incorporating the cost of labor, the technical proficiency needed from the AI system, and the economic adoption decision by firms. Overall, our AI-labor cost framework shows that labor automation should occur in two phases. In the initial phase, there is significant disruption as automation occurs for tasks that, with today’s technology, are already economically attractive to automate. In the second phase of automation, roll-out slows as new tasks must await business model innovation or large AI cost decreases to overcome their initial economic unattractiveness. Across a wide range of scenarios for this second phase, we estimate that job loss from AI automation will be less than the existing economy-wide job turnover rate, suggesting that the second phase labor displacement will be more gradual than abrupt.

In contrast to our work, much of the existing economics literature focuses on the technical feasibility of AI adoption and ignores economic feasibility. Eloundou et al. (2023) find that it is technically feasible for approximately 80% of the U.S. workforce to have at least 10% of their occupational tasks affected by the introduction of large language models (LLMs) and that “approximately 19% of workers may see at least 50% of their tasks impacted.”

While assessing the adoption potential of LLMs is important, it is difficult because the technological developments are so recent that the economics of LLM deployment, diffusion, and usage are not yet well understood. In contrast to LLMs, computer vision models have a long history, have more well-developed economic estimates, and have sufficient similarity to LLMs to produce similar economic effects.

Focusing on computer vision, we find that the difference between AI deployments being technically feasible and economically attractive is enormous. In particular, the last mile customizations needed to use AI across diverse business applications drive up costs, substantially changing the economics of adoption. While 80% of computer vision tasks are technically feasible to automate, after accounting for last mile costs we find that only 23% of worker tasks, as measured by compensation, are both technically feasible and cost effective to automate. So, AI is recapitulating a pattern that we see elsewhere in IT: For many technologies, the upfront costs are so large that only big firms have the scale necessary to justify adoption.

Our analysis also allows us to project how the economics of AI adoption will change. Just as with other costly technologies, we find that AI will become more widespread as (1) deployment costs fall, making systems affordable to smaller firms, and (2) deployment scale grows, for example through the introduction of AI-as-a-service platforms.

Our analysis also suggests policy implications, including:

- Regulatory action will be needed to guard against the rise of natural monopolies, as has been experienced in social networks, search, and e-commerce. In particular, we expect substantial consolidation to occur as AI-as-a-service platforms consolidate data and customers, particularly among providers of vital industry services such as financial services, health care, or travel.

- Substantial employment and worker retraining programs will be needed as the initial wave of AI automation replaces tasks done by humans, reconfigures processes, and creates new worker tasks.

- Labor market data and measurement programs will be needed to monitor job displacement and turnover so that job training can be adapted to changing AI capabilities and economics. Ongoing predictions of occupational job destruction and creation will also be needed so that educational and other training institutions can calibrate their offerings to the workforce that will be needed when their students graduate.

- Direct support of academic research is needed to ensure AI research and development priorities address the public interest and the advancement of knowledge as opposed to relying largely on industry research.

The AI-Labor Cost Framework

To understand the conditions under which a firm might adopt an AI system, we develop an AI-Labor Cost Framework that is broadly applicable. The intuition is simple: A firm will want to replace workers doing a task if an AI system could do that same task less expensively. The cost of the workers doing the task is easily summarized by their wages. The cost of the AI system is more complicated, and depends on the level of capability needed from the system and how broadly it can be deployed (which amortizes costs).

To illustrate the usefulness of the framework, we focus our attention on the area of computer vision, but the concept is generalizable across other application areas in AI. Computer vision emerged in the 1960s, at the dawn of AI, beginning with the quest to encode images and solve vision problems. Today, vision applications are widely found, for example, in reviewing diagnostic health care images and assessing products or automating processes in manufacturing. Not only are computer vision models relatively mature, but some image data for training AI models are freely available. For example, ImageNet is a large visual database consisting of more than 14 million images, hand-annotated to indicate the pictured objects. The database contains more than 20,000 categories, with a typical category consisting of several hundred images. Mensink et al. (2022) and Thompson et al. (2024) exploit computer vision models and ImageNet data to study transfer learning, wherein models are trained on these broad datasets and then are customized to particular “last mile” applications.

Two key ingredients in an AI cost framework are the cost of training a general model and the cost of customizing that model to a particular implementation. With both of these, it is possible to address important shortcomings of AI automation models that focus only on technological similarity, often called “AI exposure,” and in addition construct more economically grounded estimates of task automation. The result is the first end-to-end AI automation model.

A hypothetical example illustrates: A small bakery might consider automation with computer vision. Bakers regularly visually inspect ingredients to ensure they are of sufficient quality. Alternatively, such a task could be completed by a computer vision system. With the task separated from other elements of the production process, the business decision would hinge on cost effectiveness. Department of Labor O*NET data report that checking food quality comprises roughly 6% of a baker’s tasks. Thus, a small bakery with five bakers, each with average annual compensation of $48,000, has potential labor savings of about $14,000 per year. Those cost savings are far less than the cost of an AI system necessary to do this task, making it uneconomical to substitute labor with an AI system in this instance.
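The bakery arithmetic can be reproduced in a few lines. This is a minimal sketch: the staffing, compensation, and task-share figures come from the example above, while `ai_system_annual_cost` is a purely hypothetical placeholder chosen for illustration, not an estimate from the underlying study.

```python
# Figures taken from the bakery example in the text.
num_bakers = 5
annual_compensation = 48_000   # average annual compensation per baker, USD
task_share = 0.06              # share of a baker's tasks spent checking food quality (O*NET)

# Labor cost attributable to the inspection task across the whole bakery.
potential_savings = num_bakers * annual_compensation * task_share

# ASSUMPTION: hypothetical annualized cost of a vision system, for illustration only.
ai_system_annual_cost = 100_000


def worth_automating(savings: float, ai_cost: float) -> bool:
    """Automation is attractive only if labor savings exceed the AI system cost."""
    return savings > ai_cost


print(f"Potential labor savings: ${potential_savings:,.0f} per year")
print("Economically attractive to automate?",
      worth_automating(potential_savings, ai_system_annual_cost))
```

Any system cost above the roughly $14,000 in annual savings leads to the same conclusion for this bakery.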

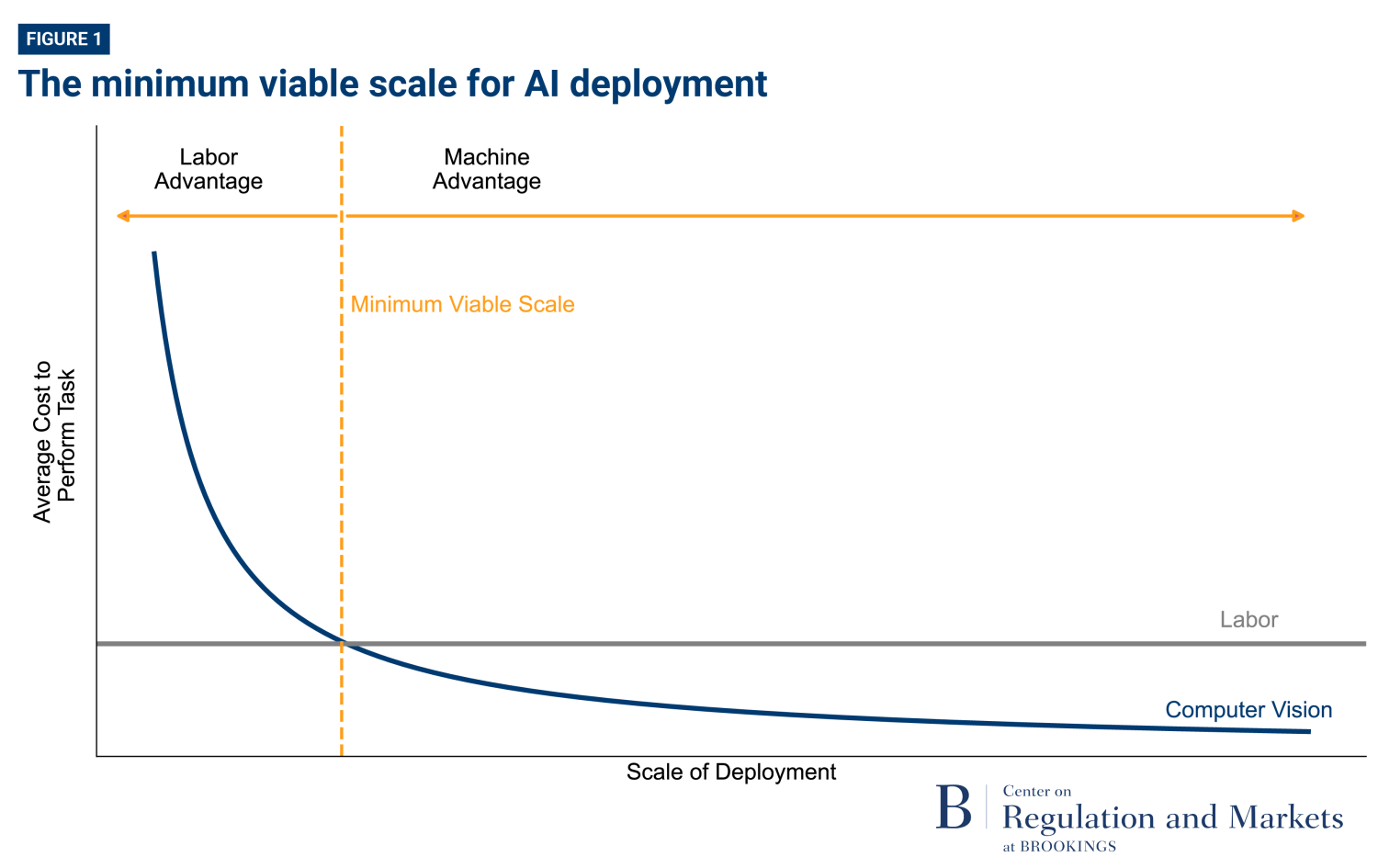

While it might not be attractive for a small bakery to automate quality inspection, the economics are much better for a larger, industrial bakery. Somewhere between these two sits the concept of “minimum viable scale.” Figure 1 illustrates this concept. Because of high initial fixed costs, the average cost of using the AI computer vision system is well above the cost of having a worker perform the task when deployments are small. But these average costs decrease as the scale of operation increases. When the average cost of using labor and using the computer vision system are equal, that deployment scale is defined as the minimum viable scale. Replacing labor with AI becomes cost-effective only when the scale of using this AI system exceeds the minimum viable scale, indicating a machine advantage.
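The break-even logic behind minimum viable scale can be sketched as follows. The functional form (a fixed cost spread over the deployment, plus a constant running cost per worker replaced) and every number below are simplifying assumptions made for illustration; they are not the cost model used in the underlying study.

```python
import math


def average_ai_cost(fixed_cost: float, running_cost_per_worker: float, scale: float) -> float:
    """Average AI cost per worker replaced when a fixed cost is spread over `scale` workers."""
    return fixed_cost / scale + running_cost_per_worker


def minimum_viable_scale(fixed_cost: float,
                         running_cost_per_worker: float,
                         labor_cost_per_worker: float) -> float:
    """Scale at which average AI cost equals labor cost.

    Returns math.inf when the AI system's running cost alone is at least
    as high as the labor cost, so no scale is large enough to break even.
    """
    margin = labor_cost_per_worker - running_cost_per_worker
    if margin <= 0:
        return math.inf
    return fixed_cost / margin


# Labor cost of the inspection task per baker: $48,000 x 6% (from the bakery example).
labor_cost_per_worker = 2_880.0
# ASSUMPTION: hypothetical annualized fixed cost and zero running cost.
fixed_cost = 144_000.0
running_cost = 0.0

mvs = minimum_viable_scale(fixed_cost, running_cost, labor_cost_per_worker)
print(f"Minimum viable scale: {mvs:.0f} workers performing the task")
```

Under these assumed numbers, a five-baker shop sits well below the break-even point, while a bakery with many more workers performing the same inspection task would sit above it.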

To determine whether AI deployments are attractive, we must first have a list of tasks being done. The Department of Labor O*NET database captures this for the U.S. economy, so we hand-classified its task descriptions to find the 414 tasks that could be replaced by computer vision in some way. We then surveyed those who perform these tasks to gather information on AI system performance requirements. These results allowed us to construct estimates of the cost required to achieve such performance (also drawing on our previous work: Thompson, Greenewald, et al., 2021; Thompson et al., 2022, 2024) and to model the economics of the adoption decision by firms with different deployment scales. Such estimates are possible because AI performance is driven by AI scaling laws that provide a recipe for the type of system required to achieve a given level of performance.

The threshold for whether adoption is economically attractive is determined by whether an AI system with capabilities equivalent in accuracy to those of workers performing the same task reduces cost.1 Brynjolfsson has called this “Turing Trap” automation (Brynjolfsson, 2022). At this threshold, the benefits provided by the workers and the AI system are the same, so the economic benefit comes from labor cost savings.

The cost of the labor performing these 414 tasks comes from multiplying the wages for each position, based on the U.S. Bureau of Labor Statistics’ 2022 Occupational Employment and Wage Statistics (OEWS) data, times the fraction of a worker’s duties that each task represents. This yields the benefit to the firm of automating that task. By comparing this labor cost of a task to the AI system cost, firms can understand if automation would be economically attractive.
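One compact way to write this comparison is shown below. The notation is ours, introduced only for illustration, and is not taken from the underlying paper: $w_o$ is annual compensation in occupation $o$, $s_{o,t}$ is the fraction of that occupation's duties represented by task $t$, $n_o$ is the number of workers in that occupation at the firm (an assumed scale term), and $C_t$ is the annualized cost of an AI system that performs task $t$ at worker-level accuracy.

```latex
B_t = w_o \, s_{o,t} \, n_o ,
\qquad
\text{automate task } t \text{ only if } B_t > C_t .
```

For the bakery example, $B_t = 48{,}000 \times 0.06 \times 5 = 14{,}400$ dollars per year.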

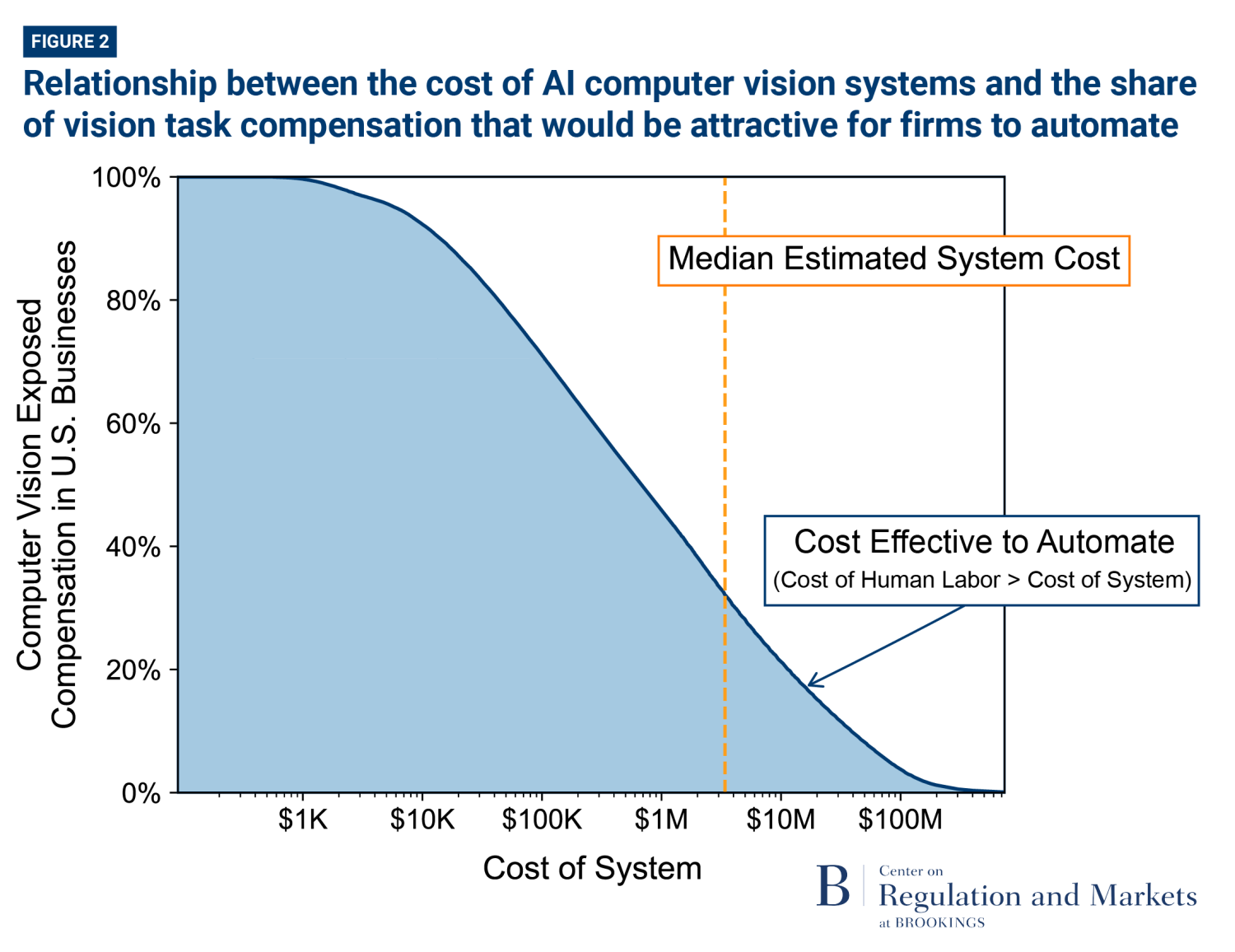

Among jobs in the U.S. non-farm business sector, 36% have at least one task exposed to computer vision. However, only 8% (23% of the exposed occupations) meet the threshold to have at least one task that is economically attractive to automate. But this is just a snapshot at one moment in time. Will this change as AI continues to evolve? In general, large changes in AI system cost are needed to substantially increase adoption. Figure 2 shows the share of computer vision task compensation that could be profitably replaced by an AI computer vision system of a given cost. Computer vision task compensation is the aggregate labor cost, including wage and benefit payments, for all computer vision tasks across all relevant occupations. For example, even if AI systems only cost $1,000, there would still be tasks that are not economically attractive to automate. These are tasks in occupations with low wages and that only involve a small portion of an occupation’s tasks or involve a small number of workers in a small firm. In contrast, there are other tasks where there are sufficient labor cost savings to justify AI systems costing $100 million. Such investments might be in tasks in high-wage occupations, tasks that represent a substantial portion of an occupation, or firms with many workers doing the same task. As Figure 2 shows, exponential decreases in cost are needed for linear increases in the share of tasks that are attractive to automate.

Paths to AI proliferation

The AI-labor cost framework suggests the proliferation of labor-replacing AI is limited. With current technology, many AI models and solutions are unappealing for firms to adopt. Consequently, the economic attractiveness (or lack of attractiveness) of AI systems raises important questions about the way in which work and production are organized in the economy. Whereas some tasks might be fully automated, others may only be partially automated or not automated at all. This could vary across firms even for the same task. For example, a large firm might fully automate whereas a small firm might not. The possible differential raises a host of “general equilibrium” type questions about how industries organize work and production and how such organization might change due to AI proliferation.

For vision tasks, we find that most U.S. workers are in firms where almost none of the tasks are cost-effective to fully automate. With the current cost structure of AI solutions, even a firm of 5,000 employees could automate less than one tenth of its existing vision labor. Consequently, it is not surprising that AI adoption has, thus far, been limited. McElheran et al. (2024) find that fewer than 6% of firms use AI-related technologies, but that those that do are disproportionately large firms, representing 18% of employment.2

At the extreme end of the firm size spectrum, even a firm with more than a million workers lacks the scale to make automating the last 15% of their vision tasks attractive. Large incremental improvements in value creation would be needed to substantially change such a result. In fact, the notion that only a small number of very large firms have the necessary scale to find economic attractiveness in AI aligns with recent research by Bessen and Wang (2023). They find that just 250 firms accounted for 85% of U.S. private sector spending on in-house developed software from 2009 to 2018. Indeed, most firms, including many large ones, according to Bessen and Wang, regularly invest little in software and R&D.

Most firms lack the scale to cost-effectively develop computer vision. But, if labor costs can be aggregated across multiple firms, the attractiveness of automation becomes much greater. We estimate that if AI model providers deploy systems across whole industries and keep prices at competitive levels, then AI automation has an economic advantage for as much as 88% of vision task compensation.3 Consequently, business models offering industry-specific, AI-as-a-service platforms will likely be an important driver of AI automation. Examples of such platforms include a diamond classification tool built by Navtech (Thompson, 2021a) and a self-driving platform collaboration by NVIDIA (Thompson, 2021b).
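The effect of pooling labor costs across firms can be illustrated with a toy calculation. All firm sizes and costs below are hypothetical, and the sketch ignores per-firm customization costs and provider margins; it shows only why a shared platform can clear a threshold that no single firm clears alone.

```python
def labor_savings(workers: int, task_cost_per_worker: float) -> float:
    """Annual labor cost of the task across `workers` people performing it."""
    return workers * task_cost_per_worker


# ASSUMPTIONS: hypothetical annualized system cost, task cost, and firm sizes.
system_cost = 144_000.0
task_cost_per_worker = 2_880.0     # e.g., $48,000 compensation x 6% task share
firm_sizes = [5, 12, 20, 30]       # workers performing the task at each firm

# Each firm evaluating its own stand-alone system.
standalone = [labor_savings(n, task_cost_per_worker) > system_cost for n in firm_sizes]

# One provider serving all firms, with the system cost shared across them.
pooled = labor_savings(sum(firm_sizes), task_cost_per_worker) > system_cost

print("Attractive for each firm alone:", standalone)
print("Attractive when pooled on a platform:", pooled)
```

In this toy setup no individual firm has enough task labor to justify the system, but the combined labor cost across all four does.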

A second route to AI proliferation is through reductions in the cost of building AI systems. Since the key criterion for adoption is a comparison between human labor costs and AI system costs, cost decreases in deploying AI systems lead directly to greater adoption. While it may not be immediately obvious, nearly all AI progress happening today would fall under this “cost-saving” route to AI proliferation. This is because AI scaling laws imply that virtually all levels of performance could be achieved at some price. So, a system that achieves phenomenal performance could, in principle, be built. If it hasn’t been, it means the costs of the data, compute, and other inputs are too high. When AI model builders improve training or deployment techniques, they are implicitly lowering the cost of achieving the needed performance levels. Put another way, the traditional view of progress (more performance for the same cost) can also be viewed as the same performance for lower cost when the full range of improvements is possible.

Extensive evidence shows that year-on-year cost improvements in many aspects of AI have been extremely rapid, including computing costs as GPU prices fall and as better algorithms are designed to make systems more efficient (Thompson, Greenewald, et al., 2021; Erdil & Besiroglu, 2023; Ho et al., 2024). While these improvements are occurring rapidly, AI proliferation will be governed by the overall cost improvement. Hence, if data or personnel costs decrease more slowly than compute costs, then they will become the bottlenecks for many applications. These bottlenecks matter because exponential changes in cost are needed to generate linear changes in the share of tasks attractive to automate, as shown in Figure 2.
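The bottleneck argument can be made concrete with a small projection. The cost split and annual decline rates below are invented for illustration and are not estimates from the cited studies; the point is only that the slowest-falling component comes to dominate the total.

```python
def projected_costs(initial: dict, annual_decline: dict, years: int) -> dict:
    """Project each cost component forward, assuming a constant annual percentage decline."""
    return {name: cost * (1 - annual_decline[name]) ** years
            for name, cost in initial.items()}


# ASSUMPTIONS: hypothetical starting cost split (total = 100) and decline rates.
initial = {"compute": 60.0, "data": 25.0, "personnel": 15.0}
decline = {"compute": 0.40, "data": 0.10, "personnel": 0.05}

after = projected_costs(initial, decline, years=5)
total_after = sum(after.values())
largest = max(after, key=after.get)

for name, cost in after.items():
    print(f"{name:>9}: {cost:6.2f} ({cost / total_after:.0%} of remaining cost)")
print(f"Total cost falls from {sum(initial.values()):.0f} to {total_after:.1f}; "
      f"largest remaining component: {largest}")
```

In this hypothetical, compute starts as the largest component but becomes the smallest after five years, so the overall decline is much slower than the decline in compute cost alone.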

We find that the last mile problem in AI is substantial. Even the presence of off-the-shelf foundation models in a domain like computer vision does not stop the need for end-user customization. Because AI automation becomes more attractive with scale, we expect that many new AI platforms will emerge. Specifically, our AI-labor cost framework implies that it would be economically attractive to automate nearly four times as many computer vision tasks if AI solutions are deployed as platforms, that is, if firms buy AI-as-a-service.

Policy implications

Because the economics of AI presents similarities to those of earlier technologies, the last mile problem is not unique to AI. Communications services and other utilities have long faced similar challenges. For instance, substantial capital investment, low marginal cost, and new workforce skills create the need for scale and transformation in how work is done. Nonetheless, policy constructed from an earlier era is likely to be inadequate to address current and future needs. The new technology will require rethinking regulatory policy and workforce training programs along with new economic measurement initiatives and support for academic research.

Regulatory actions. The rise of AI-as-a-service platforms has antitrust implications and could require revised competition policy. Our research indicates that the substantial costs associated with developing AI systems can only be amortized through large-scale deployment. This is likely to cause substantial disintermediation of existing business models, similar to what was seen during the rise of the internet (Chircu & Kauffman, 1999). In many cases, this could create “natural monopolies” where the market can support only a single provider or cost advantages push the market towards monopolization. In the same way that competition policy has had to develop in the face of large digital platforms, it will need to develop to handle AI platforms (Coyle, 2019; Ong & Toh, 2023). Because AI platforms are so dependent on training data (natural or synthetic), questions about when data should be shared or restricted will need to be investigated.

Worker retraining programs. We find that about a quarter of vision tasks performed by workers are already attractive to automate. While much smaller than previous estimates, this still implies substantial labor market disruption as firms move to automate these tasks. To address the ongoing labor market disruption from AI, it is imperative to implement retraining and reskilling programs proactively so that workers can shift into tasks that won’t be automated today or in the near future. Disseminating predictions of which tasks are attractive to automate could also help alleviate unnecessary panic in the labor market and guide policymaking in employment, investment, and education.

Labor market data and measurement programs. In subsequent years, once the initial wave of automation occurs, we estimate that incremental automation falls to below the existing job destruction rate across a wide range of potential AI developments. This implies that the level of disruption is ambiguous. If AI job destruction is in addition to existing causes, it will represent a jump in disruption. If AI job destruction substitutes for other existing causes, labor disruption might be similar to today’s. Once the initial catch-up phase of automation is complete, the ongoing deployment and adoption pace will be set by the pace of cost decreases and platformization. To maximize the economic benefits of AI, cost reductions and novel market structures, such as AI-as-a-service, are very likely required. Consequently, such radical labor market restructuring will require new data and measurement programs. Most economic statistics programs were designed for an earlier industrial era and are not well suited to measure the impact of AI and digital transformation.

Recent Census Bureau innovations have begun to address existing program shortcomings, but much more remains to be done. For example, the Annual Integrated Economic Survey integrates seven existing surveys, making business response easier, delivering more timely and higher quality data, and reducing Census Bureau costs. In addition, the recently created Business Trends and Outlook Survey measures business conditions and projections, and its supplemental questions are designed to provide insight into how widespread AI use is. However, before these new programs can be further developed and matured, much more needs to be understood about industry-, sector-, and firm-level AI deployment and adoption.

Direct support of academic research. High costs of AI deployment can prevent automation throughout the economy. As Ahmed and Thompson (2023) show, with much greater access to the capital required for both basic research and product development, the technology industry has dominated the direction of AI research. In some cases, for example in the public sector or nonprofit world, there may be a strong public policy case that welfare could be improved through government action to develop or promote the development of models that advance publicly minded goals. For example, small health clinics might not individually have sufficient scale to justify the creation of AI models targeted to them, or medical researchers working on diseases affecting low-income patients might not have sufficient market to justify AI development. The challenge facing the academic sector is the resource intensive nature of AI research—large datasets, talented AI scientists, and enormous computing power. In these cases, incentives could be created to promote AI systems and data sharing, and academic researchers could be provided funding and/or computational resources to work on such projects. Without such intervention, the direction of AI technology will largely be in the hands of a few private companies.

Conclusion

The AI-labor cost framework created by us and our coauthors in Svanberg et al. (2024) provides the first end-to-end model of AI automation that evaluates the level of proficiency needed for a task, the cost of achieving that proficiency via labor or AI systems, and the economic decision by firms whether to adopt. For computer vision, where the cost estimates for AI systems are well developed, deployment at scale (likely among a small number of very large businesses) is economically attractive and should be expected in the years immediately ahead. Over time, reductions in AI system costs and increases in the scale at which systems are deployed have the potential to increase automation. Scale can be gained through the formation of AI-as-a-service platforms that are deployed broadly across industrial sectors.

In the years immediately ahead, large-scale deployment will be most attractive, favoring large organizations that have high-wage workers and many workers performing the same vision tasks. Over a longer time horizon, cost decreases and platformization will make automation more attractive for firms with low-wage tasks and fewer workers engaged in vision tasks. These firms will incrementally adopt as their incentives shift. So, from a policy perspective, near-term worker retraining will need to target workers from larger organizations where automation is already attractive.

We expect the AI-labor cost framework that we and our coauthors developed to be useful beyond just computer vision. For example, it should be useful for understanding how LLMs that require customization will be integrated into the business practices of large organizations in the years immediately ahead. It may also be useful for other areas, such as prediction models used in supply chain management and employee turnover, which are reliant on proprietary and confidential data inside corporate firewalls and hence will require significant customization even in large organizations.

Our results point to a notably different path for job displacement resulting from AI automation than previously explored in the literature. We find that scale economies are crucial to making systems cost-effective and thus to determining their deployment and adoption. We also find that the medium-term pace of job displacement, at least from computer vision, is likely more in line with traditional job churn and more amenable to traditional policy interventions.

Because AI system development costs could be offset with deployment across many firms, significant industrial organization challenges need to be solved as business leaders fund and launch new business models. If these industrial organization challenges are met, a major restructuring of industries should be expected as tasks are separated from firm operations to third-party providers.

-

References

Acemoglu, D., Autor, D., Dorn, D., Hanson, G.H., & Price, B. (2016). Import Competition and the Great U.S. Employment Sag of the 2000s. Journal of Labor Economics, 34(S1, Part 2), S141-S198.

Ahmed, N., & Thompson, N.C. (2023, December 5). What Should Be Done About the Growing Influence of Industry in AI Research. Brookings. https://www.brookings.edu/articles/what-should-be-done-about-the-growing-influence-of-industry-in-ai-research/

Autor, D., Chin, C., Salomons, A., & Seegmiller, B. (2023). New Frontiers: The Origins and Content of New Work, 1940–2018. Quarterly Journal of Economics, 139(3). https://doi.org/10.1093/qje/qjae008

Bessen, J., & Wang, X. (2023). The Intangible Divide: Why do so few firms invest in innovation? [Unpublished manuscript].

Bonney, K., Breaux, C., Buffington, C., Dinlersoz, E., Foster, L. S., Goldschlag, N., Haltiwanger, J. C., Kroff, Z., & Savage, K. (2024). Tracking Firm Use of AI in Real Time: A Snapshot from the Business Trends and Outlook Survey (No. w32319). National Bureau of Economic Research. https://www.nber.org/papers/w32319

Brynjolfsson, E. (2022). The Turing Trap: The Promise & Peril of Human-Like Artificial Intelligence. Daedalus, 151(2), 272–287. https://doi.org/10.1162/daed_a_01915

Chircu, A. M., & Kauffman, R. J. (1999). Strategies for Internet Middlemen in the Intermediation/Disintermediation/Reintermediation Cycle. Electronic Markets, 9(1-2), 109-117.

Coyle, D. (2019). Practical Competition Policy Implications of Digital Platforms. Antitrust Law Journal, 82(3), 835-860. https://www.jstor.org/stable/27006776

Eloundou, T., Manning, S., Mishkin, P., & Rock, D. (2023). GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models (arXiv:2303.10130). arXiv. http://arxiv.org/abs/2303.10130

Erdil, E., & Besiroglu, T. (2023). Algorithmic progress in computer vision (arXiv:2212.05153). arXiv. http://arxiv.org/abs/2212.05153

Ho, A., Besiroglu, T., Erdil, E., Owen, D., Rahman, R., Guo, Z. C., Atkinson, D., Thompson, N., & Sevilla, J. (2024). Algorithmic progress in language models (arXiv:2403.05812). arXiv. http://arxiv.org/abs/2403.05812

Maslej, N., Fattorini, L., Perrault, R., Parli, V., Reuel, A., Brynjolfsson, E., Etchemendy, J., Ligett, K., Lyons, T., Manyika, J., Niebles, J.C., Shoham, Y., Wald, R., & Clark, J. (2024). The AI Index 2024 Annual Report. AI Index Steering Committee, Institute for Human-Centered AI, Stanford University. https://aiindex.stanford.edu/wp-content/uploads/2024/04/HAI_2024_AI-Index-Report.pdf

McElheran, K., Li, J. F., Brynjolfsson, E., Kroff, Z., Dinlersoz, E., Foster, L., & Zolas, N. (2024). AI adoption in America: Who, what, and where. Journal of Economics & Management Strategy, 33(2). https://doi.org/10.1111/jems.12576

Mensink, T., Uijlings, J., Kuznetsova, A., Gygli, M., & Ferrari, V. (2022). Factors of Influence for Transfer Learning Across Diverse Appearance Domains and Task Types. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12), 9298–9314. https://doi.org/10.1109/TPAMI.2021.3129870

Ong, B., & Toh, D.J. (2023). Digital Dominance and Social Media Platforms: Are Competition Authorities Up to the Task? International Review of Intellectual Property and Competition Law, 54, 527–572. https://doi.org/10.1007/s40319-023-01302-1

Svanberg, M., Li, W., Fleming, M., Goehring, B., & Thompson, N. (2024). Beyond AI Exposure: Which Tasks are Cost-Effective to Automate with Computer Vision? Available at SSRN 4700751. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4700751

Thompson, N.C. (2021a). Navtech: The new platforms being created by deep learning [Unpublished manuscript]. http://www.neil-t.com/wp-content/uploads/2022/01/Navtech-case-study-2021-12-14.pdf

Thompson, N.C. (2021b). NVIDIA: Building a Compute and Data Platform for Self-Driving Cars [Unpublished manuscript]. http://www.neil-t.com/wp-content/uploads/2022/01/NVIDIA-case-study-2021-12-14.pdf

Thompson, N., Fleming, M., Tang, B. J., Pastwa, A. M., Borge, N., Goehring, B. C., & Das, S. (2024). A Model for Estimating the Economic Costs of Computer Vision Systems that use Deep Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 38(21), 23012-23018. https://ojs.aaai.org/index.php/AAAI/article/view/30343

Thompson, N.C., Fleming, M., Das, S., Goehring, B., & Borge, N.J. (2022). Where is it Cost Effective To Deploy AI: MIT-IBM Industry Showcase.

Thompson, N. C., Greenewald, K., Lee, K., & Manso, G. F. (2021). Deep learning’s diminishing returns: The cost of improvement is becoming unsustainable. IEEE Spectrum, 58(10), 50-55.

U.S. Bureau of Labor Statistics (2022). Occupational Employment and Wage Statistics. Retrieved July 17, 2023, from https://www.bls.gov/oes/

U.S. Census Bureau (2021). 2021 Business Dynamics Statistics. Retrieved December 20, 2023, from https://www.census.gov/programs-surveys/bds.html

U.S. Department of Labor (2023). O*NET. Retrieved April 4, 2023, from https://www.onetcenter.org/overview.html

-

Acknowledgements and disclosures

MIT’s FutureTech Research Project is funded by grants from Open Philanthropy, the National Science Foundation, Accenture, IBM, the MIT-Air Force AI accelerator, and the MIT Lincoln Laboratory. Fleming is the former Chief Revenue Scientist at Varicent and has served as a paid technology consultant to the Bureau of Economic Analysis and the Project Management Institute. The authors did not receive financial support from any firm or person for this article or, other than the aforementioned, from any firm or person with a financial or political interest in this article. Other than the aforementioned, none of the authors is currently an officer, director, or board member of any organization with a financial or political interest in this article.

-

Footnotes

1. See Svanberg et al. (2024) for more discussion of why this is the critical cutoff.

2. Similarly, Bonney et al. (2024) find mid-single-digit AI penetration in the fourth quarter of 2023 and the first quarter of 2024. Across a select subset of industries and business functions, Maslej et al. (2024) found 15% average AI adoption in 2023.

3. The cost estimate that makes the automation of 88% of vision task compensation economically attractive includes only system development expense and excludes sales, general, and administrative expense.